Epic Talks Samaritan & The Future Of Graphics

Note that this is an archived copy of the original article.

By Andrew Burnes on Tue, May 10, 2011

At this year’s Game Developers Conference, attendees were wowed by Epic Games’ Samaritan presentation, a sneak peek at the visual fidelity achievable with Unreal Engine 3 and the horsepower of three NVIDIA GeForce GTX 580 graphics cards. Offering a world-first look at a real-time demonstration built entirely in DirectX 11, Epic’s Samaritan demo set tongues wagging and served as a wake-up call for those in the industry still languishing in the dark ages of DirectX 9.

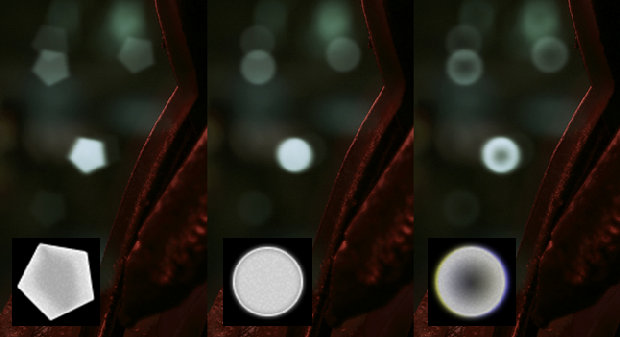

In the opening frames of the demo, one of the most talked-about advancements is on show. Known as Bokeh Depth of Field, it is an upgrade to the standard out-of-focus blurring seen in games of recent years, although the technique has in fact existed for over a decade, having been demonstrated by the now-defunct 3dfx with its famed Voodoo T-Buffer in 1999. The Bokeh component refers to the out-of-focus, definable shapes seen in television and film, which are typically used to enhance the mood or visual quality of a scene. The term derives from the Japanese word "boke," which literally means "blur," but Epic Games’ Senior Graphics Architect, Martin Mittring, believes that the effect is far more than mere obfuscation, in that "it allows you to lead the viewer’s attention and creates depth," an effect that is "very important for storytelling, which is an integral part of today’s games." Mittring also acknowledges that "this effect can be distracting," but adds that "in some situations it can be used to improve aesthetic quality, like when aiming through a weapon."

The first frames of the Samaritan demo immediately show Bokeh Depth of Field effects to the left of the Epic Games logo.

The main use of Bokeh Depth of Field will be in cut scenes, where developers can predefine the shape of the virtual camera’s lens, which dictates the shape of the blurred objects, as seen in the examples below. Mittring is happy to state that Epic’s new Bokeh method "runs quite fast on modern graphics cards," but is equally willing to admit that there is some way to go before it matches the filmic effect mastered by Hollywood’s best and brightest over several decades. To attain "better interaction with other features like translucency, proper occlusion and motion blur," Mittring and his colleagues will require more powerful hardware and better software development tools, and for the time being those remain some way off.

Three examples of the artist-defined Bokeh effects available for use in Unreal Engine 3.

An example of the Bokeh effect, as seen in the Samaritan demo.
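The link between lens shape and blur shape can be illustrated with the thin-lens model that most depth-of-field effects start from. The sketch below is a generic illustration under stated assumptions, not Epic’s Unreal Engine 3 code: circle_of_confusion uses the standard thin-lens formula (aperture diameter A, focal length f, focus distance S1, object distance S2), while bokeh_kernel and coc_to_pixels are hypothetical helpers, with a regular polygon standing in for an artist-defined lens shape.

```python
import math

def circle_of_confusion(aperture_mm, focal_mm, focus_mm, object_mm):
    """Thin-lens blur-circle diameter on the sensor, in mm:
    A * |S2 - S1| / S2 * f / (S1 - f)."""
    return (aperture_mm * abs(object_mm - focus_mm) / object_mm) * (
        focal_mm / (focus_mm - focal_mm))

def coc_to_pixels(coc_mm, sensor_width_mm, image_width_px):
    """Convert a sensor-space blur diameter to screen pixels."""
    return coc_mm * image_width_px / sensor_width_mm

def bokeh_kernel(coc_px, sides=6, rotation=0.0):
    """Normalised mask shaped like a regular polygon 'aperture'.

    The polygon is a hypothetical stand-in for an artist-defined lens shape;
    its width in pixels is the circle of confusion.
    """
    size = max(1, int(round(coc_px)))
    centre = (size - 1) / 2.0
    radius = size / 2.0
    apothem = radius * math.cos(math.pi / sides)  # centre-to-edge distance
    mask = []
    for y in range(size):
        row = []
        for x in range(size):
            dx, dy = x - centre, y - centre
            # Inside if the pixel lies behind every edge of the polygon.
            inside = all(
                dx * math.cos(rotation + (2 * k + 1) * math.pi / sides)
                + dy * math.sin(rotation + (2 * k + 1) * math.pi / sides) <= apothem
                for k in range(sides)
            )
            row.append(1.0 if inside else 0.0)
        mask.append(row)
    total = sum(map(sum, mask))
    # Normalise so a splatted highlight keeps its energy, just spread out.
    return [[v / total for v in row] for row in mask]
```

In one common approach, a renderer would scatter each bright, out-of-focus pixel’s colour using a kernel like this, which is where the hexagonal or otherwise shaped highlights come from; objects at the focus distance get a blur diameter of zero and stay sharp.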

The technology behind the Samaritan demo took eight months to develop and implement. Work first began in June 2010, when NVIDIA Developer Technology Engineer Bryan Dudash and his team started developing the DirectX 11 RHI (Rendering Hardware Interface) wrapper code for Epic’s Unreal Engine 3, which in layman’s terms allows the engine to render through DirectX 11 and make use of its advanced features. Epic’s engine team pre-planned and implemented the DirectX 11 features, and then commenced development of the Samaritan demonstration itself, which had been storyboarded and meticulously prepared in advance. "For the final stages of the demo’s development, NVIDIA engineers performed performance analysis and debug to make sure that the demo made the most efficient use of the GPU power available," adds Dudash, with work continuing apace on all sides right up until the unveiling of the Samaritan demo on March 2nd, 2011.

An early storyboard mapping out the Samaritan scenes and the technologies they should highlight.

As already mentioned, the demonstration ran in real time on a 3-Way SLI GeForce GTX 580 system, but even with the raw power that configuration affords, technological boundaries were still an issue. For that reason, Daniel Wright, a Graphics Programmer at Epic, felt that "having access to the amazingly talented engineers at NVIDIA’s development assistance centre helped Epic push further into the intricacies of what NVIDIA’s graphics cards could do and get the best performance possible out of them." Being a tightly controlled demo, Samaritan carries none of the artificial intelligence and other overheads of an actual, on-market game, which raises the question: with enough time and effort, could it run on just one graphics card, the most common configuration in gaming computers? Epic’s Mittring believes so, but adds that "with Samaritan, we wanted to explore what we could do with DirectX 11, so using SLI saved time."

Early Samaritan concept art for a visual style not used, though elements did transfer to the final demo. Note the billowing coat, the use of shadow, and the foreboding darkness that manifests itself as a corrupt police force in the final demo.

In all likelihood, a game of equal fidelity won’t be a reality until the next generation of graphics cards, but this is a chicken-and-egg situation: how can hardware be developed for the next generation of graphical technology when that technology has yet to be programmed? For Samaritan, SLI allowed everyone to bypass this dilemma, harnessing the raw power of three graphics cards with an ample helping of brute force. For NVIDIA, the collaboration with Epic was "the definition of a win-win," as Dudash puts it. "Working with Epic was an amazing experience. Their engineers are at the forefront of graphics knowledge in the industry and they have some of the top artist talent out there."

"Through the course of making a challenging and technologically advanced demo like Samaritan," continues Dudash, "we learnt a lot about the types of features that are useful, and which are difficult to use. Additionally, we found the limitations of the current APIs and feature sets, both in hardware and software." This newly acquired wisdom "will work its way into our future hardware designs, as well as driver updates." In closing, NVIDIA’s Developer Technology Engineer has some complimentary words for Epic: "It's been a real pleasure working with them on this demo. I can't wait to see what they come up with next."

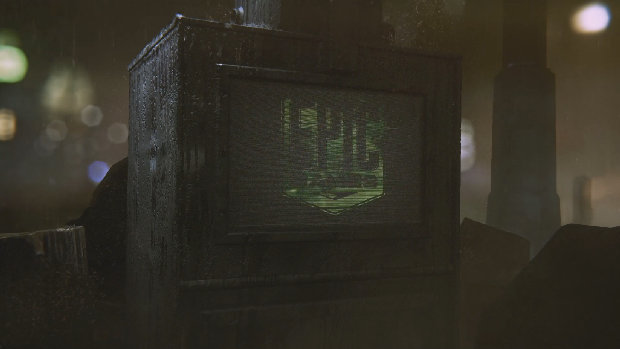

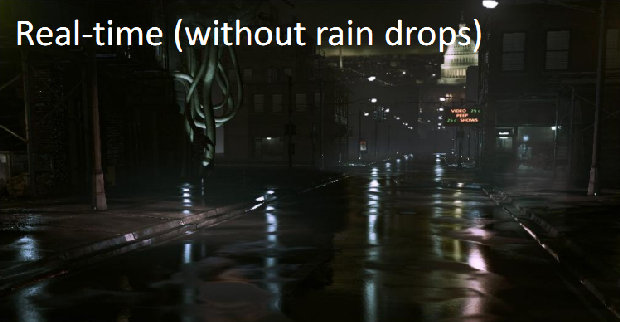

As the Samaritan demo transitions into a street scene looking down toward the Capitol building in Washington, D.C., several DirectX 11 features combine to create an unprecedented level of detail and immersion. The element that first draws the eye is the wet street and sidewalk, featuring, as it does, numerous textures, elevation changes, and light sources.

The opening scene of the Samaritan demo, showing Point Light Reflections, billboards, and many other technologies.

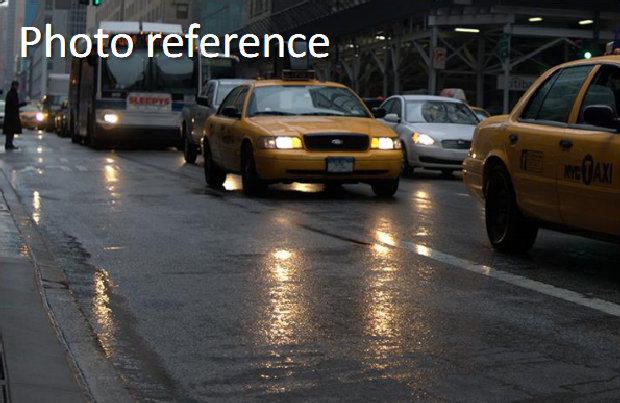

Eschewing the traditional Phong and Blinn-Phong techniques, which were unable to produce the desired reflective look, Epic’s new specular Point Light Reflections are as close to ‘real’ as current technology allows, as the following photo reference and in-engine comparison demonstrate.

The real-world reference for the technique. The real-time result (below) features a smoother street surface that reflects background detail and surroundings in an aesthetically pleasing manner, one that would not be possible if the real-world example were emulated exactly.

The in-game result. Note the breaks in the reflected light from the non-uniform surfaces.
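For reference, the specular terms of the two classic models Epic set aside can be sketched as follows. These are the textbook Phong and Blinn-Phong formulas, not code from Unreal Engine 3, and the function names are hypothetical. Both describe a point light’s highlight as a single lobe shaped by one shininess exponent; the article does not say precisely where they fell short, so the sketch only shows what was replaced.

```python
import math

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def reflect(incident, normal):
    """Mirror `incident` about the unit `normal`: I - 2 (N . I) N."""
    d = dot(normal, incident)
    return tuple(i - 2.0 * d * n for i, n in zip(incident, normal))

def phong_specular(n, l, v, shininess):
    """Phong: (R . V)^shininess, with R the light direction mirrored about N.

    n, l and v are unit vectors: surface normal, direction to the light,
    and direction to the viewer.
    """
    r = reflect(tuple(-c for c in l), n)
    return max(0.0, dot(r, v)) ** shininess

def blinn_phong_specular(n, l, v, shininess):
    """Blinn-Phong: (N . H)^shininess, with H the half vector between L and V."""
    h = normalize(tuple(a + b for a, b in zip(l, v)))
    return max(0.0, dot(n, h)) ** shininess
```

With the viewer exactly in the mirror direction both terms return 1; away from it, Blinn-Phong falls off more slowly than Phong for the same exponent, which is why the two models need different exponents to produce a similarly sized highlight.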

The point lights referenced in the technology’s name are the headlights and streetlights. To emulate the street scene in the reference photo, a multitude of different textures are used, and the road, concrete and pavement surfaces are laid out non-uniformly, allowing reflections to be interrupted, manipulated, and occluded by static and dynamic objects, whether street furniture or moving vehicles. As Epic’s Mittring points out, the "wet street scene is dominated by reflections, and bright objects appear to be mirrored everywhere." To achieve this, other technologies are layered into the scene; together they are known collectively as Image Based Reflections.

In addition, Samaritan introduces Billboard Reflections, created using a textured billboard and accurate lighting that replicates the board’s design. These billboards can be placed like any other object in the Unreal Engine editor, and the light they emit can be reflected accurately, and with relative ease, on any surface. These reflections can then be shown in detail or blurred for extra dramatic effect, and multiple billboards can be introduced, with their lighting combining accurately or being occluded by the brighter source. Though seemingly insignificant, this billboard technology is a dramatic improvement over existing reflection techniques, and the methods it employs can be built upon in the future to light and reflect entire scenes.

A billboard’s light can be accurately reflected off of multiple surfaces, or tweaked by the designer to create a specific effect. Glossiness is affected by the material’s specular power and its distance from the light source, which in this example results in the wet floor appearing brighter than the nearby wall.

Three examples of the same scene manipulated using isotropic reflections and varying levels of blur.
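One way to picture a billboard reflection is to trace the mirrored view ray from a shaded point until it hits a textured rectangle, then choose a blur level from how glossy the surface is and how far the ray travelled. The sketch below is an illustration under those assumptions, not Epic’s shader: intersect_billboard, reflection_mip and the lobe-width heuristic are all hypothetical, and the ray direction is assumed to be unit length so that the returned t is a distance.

```python
import math

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def add(a, b):
    return tuple(x + y for x, y in zip(a, b))

def scale(a, s):
    return tuple(x * s for x in a)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def intersect_billboard(origin, direction, centre, normal, axis_u, axis_v, half_w, half_h):
    """Intersect a reflection ray with a rectangular billboard.

    axis_u and axis_v are unit vectors along the billboard's width and height.
    Returns (t, u, v) with u, v in [0, 1] for a texture lookup, or None on a miss.
    """
    denom = dot(direction, normal)
    if abs(denom) < 1e-8:
        return None  # ray runs parallel to the billboard's plane
    t = dot(sub(centre, origin), normal) / denom
    if t <= 0.0:
        return None  # billboard lies behind the reflecting point
    local = sub(add(origin, scale(direction, t)), centre)
    s = dot(local, axis_u) / half_w
    r = dot(local, axis_v) / half_h
    if abs(s) > 1.0 or abs(r) > 1.0:
        return None  # plane hit falls outside the rectangle
    return t, (s + 1.0) / 2.0, (r + 1.0) / 2.0

def reflection_mip(distance, specular_power, billboard_width, texture_width_px, max_mip=8.0):
    """Pick a blur (mip) level for the billboard texture (hypothetical heuristic)."""
    # A Phong-style lobe cos^n(theta) ~ exp(-n * theta^2 / 2), so its angular
    # spread is roughly 1 / sqrt(n): glossier surfaces give narrower cones.
    spread = 1.0 / math.sqrt(max(specular_power, 1.0))
    # Width of that cone where it reaches the billboard, measured in texels.
    footprint = 2.0 * distance * math.tan(spread) * texture_width_px / billboard_width
    return min(max_mip, max(0.0, math.log2(max(footprint, 1.0))))
```

A renderer following this idea would sample the billboard texture at (u, v) with the chosen mip level and add the result to the surface’s specular term. The heuristic reproduces the behaviour described in the caption above, with blur growing as specular power falls and as distance grows; combining several billboards and resolving which one occludes another is left out.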

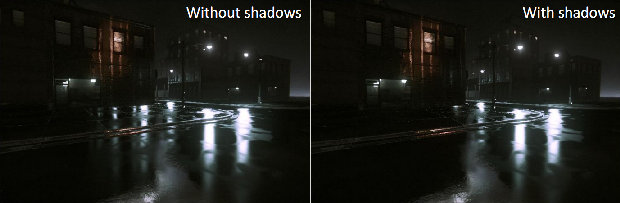

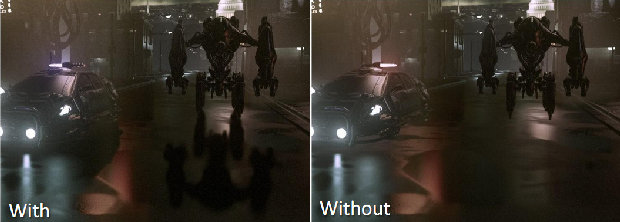

To ensure that this advanced lighting does not unrealistically clip or leak through objects and geometry in a scene, Static Reflection Shadows are created when developers compile and render their levels. To guarantee the same result on moving objects, such as the police car in the Samaritan demo, Dynamic Reflection Shadows are generated in real time from the position of the camera or the character’s viewpoint.

An example of Static Reflection Shadows, used to prevent the light leakage seen in the leftmost image.

An example of Dynamic Reflection Shadows, grounding the robot and car to the terrain, whereas on the right, without DRS, they appear to float.

Such is the importance of Image Based Reflections, and the effect they have on the fidelity of a scene, that it comes as no surprise they are "the most expensive parts of the demo," as Mittring confirms. Of this group of effects, Billboard Reflections and their occlusion and shadowing are singled out as the most costly, performance-intensive effects in the entire Samaritan demo, a small price to pay for a significant technological upgrade.

Epic’s commonly-used ‘Powered by Unreal Technology’ motif and slogan, rendered as a billboard in the Samaritan demo. Note the reflections on the surfaces and objects to the right.

Balancing out the cost of Image Based Reflections is Deferred Shading, a lighting technique employed in the demo’s DirectX 11 renderer. In Unreal Engine 3 titles developed using DirectX 9 and DirectX 10, the lighting method used is called Forward Shading, because the dynamic lighting calculations are performed at the same time as each object in the scene is rendered. With Deferred Shading, geometry rendering is handled separately: surface information such as depth, normals and material properties is written to a set of intermediate graphics buffers, and the lighting calculations are combined later in a ‘deferred pass’, instead of each lit result being written immediately to the frame buffer. The result is that the computational power and memory bandwidth required to accurately light the scene are reduced, and because only visible portions are shaded, not those obscured by buildings or other objects, scene complexity has less impact and the frame rate increases.

According to Epic’s Mittring, Deferred Shading "has a higher performance cost for simple scenes, but with complex geometry and many lights the method pays off. As we are limited by what can be done in a small fraction of a second, we get more geometry detail with more lights." Indeed, in the opening street scene of the Samaritan demo, one hundred and twenty-three dynamic lights are visible, and through the use of Deferred Shading, performance is ten times higher than with the DirectX 9 Forward Shading of old.

The one hundred and twenty-three deferred lights used in the opening scene of the Samaritan demo. Note the removal of the police car in the right lane of the road, leaving only its dynamic red and blue lights.
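The forward versus deferred trade-off can be made concrete with a deliberately tiny model: a one-dimensional "screen", flat-coloured surfaces and point lights with a linear falloff. Everything here (Surface, Light, forward_shade, deferred_shade) is a hypothetical illustration rather than Unreal Engine 3 code, and real G-buffers hold depth, normals and material parameters in GPU render targets. The forward path lights every fragment as it is drawn, so overdraw wastes work; the deferred path first stores the closest surface per pixel, then lights only the visible pixels each light reaches. Both produce the same image.

```python
from dataclasses import dataclass

@dataclass
class Surface:
    start: int     # first pixel covered on a toy one-dimensional screen
    end: int       # one past the last pixel covered
    depth: float   # smaller values are closer to the camera
    albedo: float

@dataclass
class Light:
    x: float
    radius: float
    intensity: float

def light_at(light, x):
    """Toy point light with linear falloff, zero outside its radius."""
    return light.intensity * max(0.0, 1.0 - abs(x - light.x) / light.radius)

def forward_shade(width, surfaces, lights):
    """Light every fragment as it is drawn; the depth test runs afterwards."""
    color = [0.0] * width
    depth = [float("inf")] * width
    evaluations = 0
    for s in surfaces:
        for x in range(s.start, s.end):
            lit = 0.0
            for light in lights:  # simplification: no per-object light culling
                evaluations += 1
                lit += light_at(light, x)
            if s.depth < depth[x]:  # hidden fragments were still fully lit
                depth[x] = s.depth
                color[x] = s.albedo * lit
    return color, evaluations

def deferred_shade(width, surfaces, lights):
    """Geometry pass fills a G-buffer; a separate pass lights visible pixels."""
    depth = [float("inf")] * width
    albedo = [0.0] * width
    for s in surfaces:  # geometry pass: no lighting, keep the closest surface
        for x in range(s.start, s.end):
            if s.depth < depth[x]:
                depth[x] = s.depth
                albedo[x] = s.albedo
    color = [0.0] * width
    evaluations = 0
    for light in lights:  # deferred pass: only pixels inside each light's radius
        lo = max(0, int(light.x - light.radius))
        hi = min(width, int(light.x + light.radius) + 1)
        for x in range(lo, hi):
            if depth[x] != float("inf"):
                evaluations += 1
                color[x] += albedo[x] * light_at(light, x)
    return color, evaluations

if __name__ == "__main__":
    # A wall drawn first, then a car in front of part of it, lit by ten lights.
    surfaces = [Surface(0, 100, 10.0, 0.5), Surface(20, 80, 1.0, 0.8)]
    lights = [Light(float(x), 8.0, 1.0) for x in range(5, 100, 10)]
    _, forward_cost = forward_shade(100, surfaces, lights)
    _, deferred_cost = deferred_shade(100, surfaces, lights)
    print(forward_cost, deferred_cost)  # 1600 163
```

The evaluation counts only illustrate the mechanism; they are not a model of the ten-fold speed-up reported for Samaritan, and the forward path here deliberately skips the per-object light culling that real forward renderers use.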
