Epic Talks Samaritan & The Future Of Graphics - Page 2

Note that this is an archived copy of the original article. Please see here for a more detailed explanation.

Following a close-up of Billboard Lighting, the eponymous Samaritan is seen for the very first time. The first few shots of the protagonist highlight enhanced particle effects, localised motion blurring, and the aforementioned lighting system, but as he raises the blowtorch to his face to light a cigarette, Subsurface Scattering is seen for the first time.

The first glimpse of Subsurface Scattering in Epic’s Samaritan demo.

Designed to tackle the ‘plastic wax’ phenomenon, whereby light hits a character and it simply bounces back, as if the character were made of plastic, Subsurface Scattering renders Forward Lighting as it hits the surface of an object, scatters throughout its interior, and exits the object at a different location. In the terms of the Samaritan demo, light hitting characters’ skin is absorbed, realistically shifted under the skin, and then emitted from a different location. For a real world example, shine a torch through a hand in a dark room, and observe that the light continues through from the other side and illuminates the darkness.

On the left, only diffuse lighting on the face; in the middle, Subsurface Scattering; and on the right, the two combined. Note how the final result has less of a reflective sheen and that the light spreads rather than remaining fixed in place as in the first example.
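
To make the three panels concrete, here is a minimal sketch that assumes a one-dimensional strip of skin samples and uses a simple Gaussian blur as a stand-in for the scattering step. It is not Epic's implementation: the function names, the blur width, and the 50/50 mix are all illustrative assumptions.

```python
import math

def gaussian_kernel(radius, sigma):
    """Return a normalised 1D Gaussian kernel of length 2 * radius + 1."""
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def scatter(diffuse, radius=3, sigma=1.5):
    """Spread incoming light to neighbouring samples (assumed blur model)."""
    kernel = gaussian_kernel(radius, sigma)
    n = len(diffuse)
    out = []
    for x in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            # Clamp at the ends of the strip by repeating the border sample.
            src = min(max(x + k - radius, 0), n - 1)
            acc += w * diffuse[src]
        out.append(acc)
    return out

def combine(diffuse, scattered, mix=0.5):
    """Blend the sharp diffuse term with the scattered term."""
    return [(1.0 - mix) * d + mix * s for d, s in zip(diffuse, scattered)]

# A single bright sample in the middle of an otherwise unlit strip.
diffuse = [0.0] * 5 + [1.0] + [0.0] * 5
scattered = scatter(diffuse)
final = combine(diffuse, scattered)
```

In this toy model the bright sample loses some of its intensity and its neighbours gain some, which mirrors the "light spreads rather than remaining fixed in place" observation in the caption.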

Subsurface Scattering was an especially important feature for Epic to implement, as the human eye is trained to detect details in faces and objects for the instant recognition of threats and emotions, and research shows that the greatest barrier to in-game photorealism is the rendering of a face. That challenge goes beyond lighting and modelling to include mannerisms, eye and mouth movement, and more, but Epic’s advancement moves us a step closer to crossing the Uncanny Valley. There is room for improvement, however, as Epic readily admits: technological limitations restrict the number of layers of skin light can penetrate, and also prevent light scattered from one source being absorbed by another.

The blowtorch scene and subsequent close-up also highlight another major advancement, that of realistic hair. Something that games have struggled with since the first use of polygons, hair is typically rendered as a solid block with a few strands rendered separately, or more recently in big-budget games as multiple, thick, non-transparent layers.

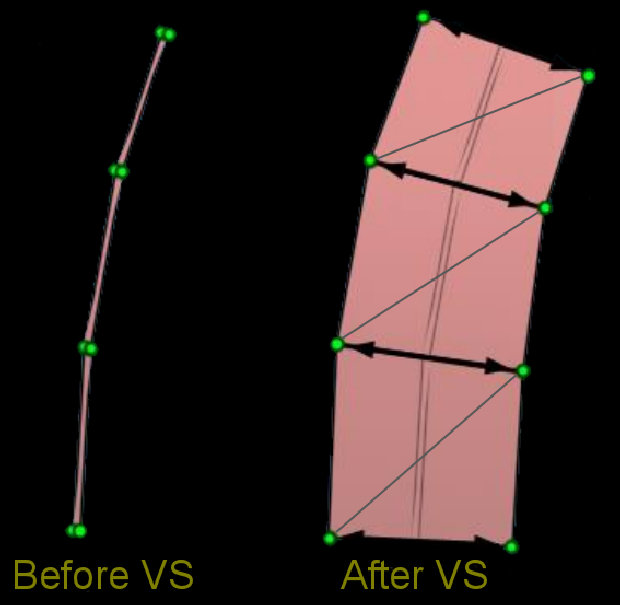

To tackle this problem, Epic has created an innovative solution based upon existing mesh skinning techniques, using Camera Aligned Triangle Strips. The Vertices in these very thin triangle strips are expanded using the Vertex Shader, so 5,000 splines become 16,000 triangles, and by using a texture containing thirty-six individual hairs, the appearance of realistic hair is created, as well as a realistic beard, as seen on the Samaritan protagonist.

The spline (left), is placed into the Vertex Shader, where it is manipulated to create a Camera Aligned Triangle Strip (right) to place on the character’s head or face. 5,000 splines were manipulated in this manner to create the protagonist’s hair in Samaritan.
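
The expansion step can be sketched on the CPU as follows. This is only an illustration of the general camera-aligned strip idea, not Epic's vertex shader: the helper names, the `half_width` parameter, and the way the tangent is estimated are assumptions made for the example.

```python
import math

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def add(a, b):
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])

def scale(a, s):
    return (a[0] * s, a[1] * s, a[2] * s)

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def normalize(a):
    length = math.sqrt(a[0] ** 2 + a[1] ** 2 + a[2] ** 2)
    # A zero-length vector is returned unchanged (degenerate case).
    return a if length == 0.0 else scale(a, 1.0 / length)

def expand_spline(points, camera_pos, half_width):
    """Turn a polyline of N points into 2 * N strip vertices facing the camera."""
    verts = []
    last = len(points) - 1
    for i, p in enumerate(points):
        # Estimate the tangent from the neighbouring points (one-sided at the ends).
        tangent = normalize(sub(points[min(i + 1, last)], points[max(i - 1, 0)]))
        to_camera = normalize(sub(camera_pos, p))
        # The side vector is perpendicular to both the hair and the view direction.
        side = normalize(cross(tangent, to_camera))
        verts.append(add(p, scale(side, -half_width)))
        verts.append(add(p, scale(side, half_width)))
    return verts

def strip_triangle_count(vertex_count):
    """A triangle strip with V vertices contains V - 2 triangles."""
    return max(vertex_count - 2, 0)

# Hypothetical hair: three control points running up the Y axis.
hair = [(0.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 2.0, 0.0)]
strip = expand_spline(hair, camera_pos=(0.0, 1.0, 5.0), half_width=0.01)
```

Because the side vector is recomputed from the camera position, the strip always presents its full width to the viewer, which is what lets a very thin ribbon pass for a cluster of hairs once the texture is applied.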

The downside of this method is that it creates very jagged, aliased lines on the in-game model, which, at their least dense around the base of the beard, appear unacceptable for a modern game. The solution was to apply four times Multisample Anti-Aliasing to every object in the scene, and then apply a further Supersampling Anti-Aliasing effect to each individual hair on the protagonist’s head and face to create the smooth, realistic appearance seen in the Samaritan demo. "Unfortunately, the memory cost of MSAA is very high," explains Epic’s Mittring. "4x MSAA means five times more texture memory is needed, which hits a deferred renderer even harder." Mittring isn’t kidding: Epic consistently hit the limit of the GeForce GTX 580’s memory capacity, "1.5GB," in the Samaritan demo. But as can be seen in the magnified example below, the end result is worth it, creating the most realistic hair ever seen in a game.
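
One plausible reading of Mittring's "five times" figure is that each render target stores four samples per pixel plus one resolved copy. The sketch below works through that arithmetic under loudly stated assumptions: the 1920x1080 resolution, the four-target G-buffer, and the per-pixel byte sizes are invented for illustration and do not come from Epic.

```python
def render_target_bytes(width, height, bytes_per_pixel, samples=1, keep_resolved=False):
    """Memory for one render target, optionally with a resolved single-sample copy."""
    per_sample = width * height * bytes_per_pixel
    total = per_sample * samples
    if keep_resolved:
        total += per_sample
    return total

# Assumed values, not taken from the Samaritan demo.
WIDTH, HEIGHT = 1920, 1080
GBUFFER_BYTES_PER_PIXEL = [8, 8, 4, 4]  # hypothetical deferred-renderer targets

no_msaa = sum(render_target_bytes(WIDTH, HEIGHT, b)
              for b in GBUFFER_BYTES_PER_PIXEL)
msaa_4x = sum(render_target_bytes(WIDTH, HEIGHT, b, samples=4, keep_resolved=True)
              for b in GBUFFER_BYTES_PER_PIXEL)
ratio = msaa_4x / no_msaa
```

Under these assumptions the ratio comes out at exactly five, and because a deferred renderer keeps several such targets alive at once, the multiplier applies to each of them.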

The addition of Supersampling Anti-Aliasing (right) results in a remarkable improvement in visual fidelity.

The final output, as seen in the Samaritan demo.

Epic’s use of individual hair strands has a secondary advantage, in that light can penetrate the hair and dynamically shadow each individual strand, and that said light can then penetrate the scalp and facial tissue through the use of the aforementioned Subsurface Scattering.

As the Samaritan demo progresses, the protagonist’s attention is caught by an altercation on the sidewalk below, where the futuristic police force of Washington D.C. are brutally assaulting a denizen of the streets. Before intervening, his skin unexpectedly and seamlessly transforms into a metallic or stone-armoured exoskeleton in the demo’s most visually impressive scene. Whereas past effects were subtle, or simply added to the level of immersion, this transformation is front and centre, and wows with its fluidity.

The Samaritan’s transformation.

This transformation was achieved through the use of Tessellation, a key feature in the latest NVIDIA graphics cards, and for Epic’s Mittring, "it was interesting to play with." Tessellation dynamically creates new detail on a character without an additional, second character model being loaded into the scene and the old, original model swapped out. To produce this additional detail the model is tessellated, to create extra polygons that can be manipulated, and then a pre-made Displacement Map tells the model where and how to place those extra polygons, as shown in the example below.

The three different stages of transformation, each dictated by the displacement map, but rendered organically by the GPU’s Tessellators.

In the Samaritan demo, the protagonist’s face "blends between three different stages that adjust the shading and displacement over time," explains Mittring, and to "expose the details of the texture used in the displacement map, we used higher tessellation levels." NVIDIA’s Dudash continues, stating that the tessellation was achieved through the use of the Flat method, which is the fastest, "because we don’t do complex math for splines or other elements," and that the level of tessellation was set to a factor of six when the protagonist transforms.
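
As a rough CPU-side illustration of flat tessellation followed by displacement, the sketch below splits one triangle uniformly and then pushes the new vertices along the surface normal. It is a simplification: real GPU tessellators use a different subdivision pattern, and `height_fn` is a hypothetical stand-in for a displacement-map lookup.

```python
def tessellate_flat(a, b, c, factor):
    """Uniformly split triangle abc into factor ** 2 coplanar sub-triangles."""
    index = {}
    verts = []
    for i in range(factor + 1):
        for j in range(factor + 1 - i):
            u, v = i / factor, j / factor
            w = 1.0 - u - v
            index[(i, j)] = len(verts)
            # Flat method: new vertices are plain barycentric blends of the corners.
            verts.append(tuple(w * a[k] + u * b[k] + v * c[k] for k in range(3)))
    tris = []
    for i in range(factor):
        for j in range(factor - i):
            tris.append((index[(i, j)], index[(i + 1, j)], index[(i, j + 1)]))
            if j < factor - i - 1:
                tris.append((index[(i + 1, j)], index[(i + 1, j + 1)], index[(i, j + 1)]))
    return verts, tris

def displace(verts, normal, height_fn, scale):
    """Move each vertex along the normal by the sampled height times scale."""
    return [tuple(p[k] + normal[k] * height_fn(p) * scale for k in range(3))
            for p in verts]

# One flat triangle, tessellated with the factor of six quoted for the demo.
verts, tris = tessellate_flat((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), 6)
# Hypothetical displacement: height grows with the x coordinate.
bumpy = displace(verts, normal=(0.0, 0.0, 1.0), height_fn=lambda p: p[0], scale=0.1)
```

With a factor of six this toy subdivision turns one triangle into 36, and the displacement step is the only place where the surface stops being flat.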

"This works for the demo," explains Dudash, "but in a normal game situation, you will want to use dynamic tessellation to reduce the tessellation factor when objects get farther away from the camera, or are not seen. In Unreal Engine 3, enhancements will be added that enable this dynamic tessellation," which will help to dramatically improve performance in a scene, and pave the way for full-screen tessellation of every object.

This is the standard that Tim Sweeney, Epic’s CEO and Technical Director, sees the industry moving towards over the coming years.
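
The dynamic tessellation Dudash describes could be sketched as a simple distance-based falloff. This is not Unreal Engine code: the function name, the `near` and `far` distances, and the linear falloff are assumptions chosen for the example.

```python
def tessellation_factor(distance, visible=True, near=2.0, far=50.0,
                        max_factor=6.0, min_factor=1.0):
    """Pick a tessellation factor that falls off linearly with camera distance."""
    if not visible:
        # Objects that are not seen get the cheapest setting.
        return min_factor
    t = (distance - near) / (far - near)
    t = min(max(t, 0.0), 1.0)  # clamp to the [near, far] range
    return max_factor + (min_factor - max_factor) * t

close_up = tessellation_factor(1.0)
mid_range = tessellation_factor(26.0)
far_away = tessellation_factor(100.0)
hidden = tessellation_factor(1.0, visible=False)
```

A close-up keeps the full factor of six, while distant or hidden objects drop to the minimum, which is where the performance saving comes from.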

"I believe the industry has correctly converged on shaded triangles as a low-level surface representation," says Sweeney, the industry’s leading graphics engineer. "Triangles are the most efficient general primitive for representing most scenes and most objects, which are largely opaque and contain well-defined boundaries. With that in mind, though, I think we're well on our way down the REYES path, decomposing higher-order objects via tessellation."

Sweeney refers to Reyes Rendering, which, despite being an acronym, is written with only the first letter capitalized, according to its co-creator, Robert L. Cook. Created in the mid-1980s by Cook and Loren Carpenter, Reyes (Renders Everything You Ever Saw) is a computer software architecture used in 3D computer graphics to render high-quality photo-realistic images. Cook and Carpenter developed Reyes at Lucasfilm’s Computer Graphics Research Group, now known as Pixar, the film studio behind the world’s most celebrated computer-rendered movies, such as Toy Story and The Incredibles.

With Pixar-level graphics established as the goal, Sweeney outlines the technology he’d require to achieve such a feat in real time.

"Give us a sufficiently higher triangle rate to render multiple triangles per pixel, say 10 billion triangles per second, and we'd use tessellation and displacement to dice every object in the scene into sub-pixel triangles -- treating it as a "free" effect, just as texture-mapping became a free effect 14 years ago." NVIDIA’s most powerful graphics card, the GeForce GTX 590, is only capable of 3.2 billion triangles per second, so while Sweeney waits for technology to catch up with his ambition, he’s content to "layer increasingly advanced effects on top of triangle-based scenes, such as volumetric effects, particle systems, post-processing, and so on."

And that’s what Epic did with the Samaritan demo, but for their final, new effect they once again enlisted the services of NVIDIA. "One factor that can differentiate current-gen games and pre-rendered sequences is realistic 'secondary motion' on characters," says James Golding, Senior Engine Programmer at Epic Games. "We knew that with a character wearing such an iconic trench coat, performing acrobatic motions, this was going to be doubly important." And so, as the protagonist jumps off the building and hurtles toward the police below, his coat fights against gravity and the forces of nature, billowing above him, before crashing to a halt on the ground in time with his own arrival.

Samaritan’s hero lands on top of an officer, his coat reacting realistically to the forces experienced.
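
Secondary motion of this kind is often approximated with particles joined by distance constraints. The sketch below is a generic, textbook-style Verlet chain pinned at one end, offered only to illustrate the idea; it is not the code used in the demo, and the damping value, time step, and iteration count are arbitrary assumptions.

```python
import math

def simulate_chain(num_points=5, spacing=0.25, steps=600, dt=1.0 / 60.0,
                   gravity=-9.81, damping=0.99, iterations=10):
    """Simulate a 2D chain of particles whose first point is pinned in place."""
    # The chain starts horizontal; point 0 plays the role of the coat's collar.
    pos = [[i * spacing, 0.0] for i in range(num_points)]
    prev = [p[:] for p in pos]
    for _ in range(steps):
        # Verlet integration: velocity is implied by the previous position.
        for i in range(1, num_points):
            x, y = pos[i]
            vx, vy = x - prev[i][0], y - prev[i][1]
            prev[i] = [x, y]
            pos[i] = [x + vx * damping, y + vy * damping + gravity * dt * dt]
        # Repeatedly pull neighbouring points back to their rest distance.
        for _ in range(iterations):
            for i in range(num_points - 1):
                a, b = pos[i], pos[i + 1]
                dx, dy = b[0] - a[0], b[1] - a[1]
                dist = math.hypot(dx, dy)
                if dist == 0.0:
                    continue
                diff = (dist - spacing) / dist
                if i == 0:
                    # The first point is pinned, so only its neighbour moves.
                    b[0] -= dx * diff
                    b[1] -= dy * diff
                else:
                    a[0] += dx * diff * 0.5
                    a[1] += dy * diff * 0.5
                    b[0] -= dx * diff * 0.5
                    b[1] -= dy * diff * 0.5
    return pos

final_positions = simulate_chain()
```

A real coat would use a two-dimensional mesh, collision against the character, and far more particles, but the integrate-then-constrain loop shown here is the core of many cloth solvers.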

"Games have certainly been doing cloth simulation for a while," adds Golding, "but given the detail we wanted to put into the graphics of the coat, we needed much more fidelity and control than we had looked at in the past. Having worked with the PhysX team at NVIDIA for many years, we decided this would be a good opportunity for our coders and artists to get to grips with their APEX clothing tech." First seen in a game in August 2010, Clothing is one part of APEX, a set of multi-platform, scalable frameworks that allow artists to create complex, high-level, dynamic systems without any additional programming. Offering Destruction, Particle, Turbulence and Vegetation modules, Epic’s first experiments with APEX were solely focused on the aforementioned Clothing.

"Clothing has some really great tools in the form of Max and Maya plug-ins that allow artists to preview the clothing behaviour right inside their tool," says Golding, adding that "they can then adjust parameters, painting directly onto the simulation mesh, to get the right look." Already built into the Unreal Engine for third-party use, "APEX provides multi-threaded and GPU-accelerated simulation of the clothing mesh and deformation of the graphics mesh," which enabled Epic to, "simulate an extremely high-poly coat model in real time." And as is the way with new technology there are always a few kinks, so it pleased Epic to see that APEX "provides great runtime debugging tools which help us look for issues and fix them quite easily."

The result is a dynamic, realistic coat that moves accurately in time with the protagonist’s motions during the climactic, action-packed scene where all the new DirectX 11 technologies combine to create a wondrous look at the future of real-time computer game graphics. As Tim Sweeney says, "everything shown in the Samaritan demo is intended to showcase both next-generation console possibilities and DirectX 11 on today's high-end PCs," and though he admits, "bits of it – such as cloth and some post-processing effects – are technologically compatible with current-gen consoles," he believes that "the sheer magnitude of its usage in Samaritan goes far beyond."

Apart from the protagonist’s hands and face, all aspects of the scene utilize deferred rendering, resulting in a 10x performance increase.

As Sweeney is keen to point out, "all of these techniques are available to Unreal Engine 3 licensees and can be readily adopted in PC games today," but more excitingly, they are readily available for anyone to use, regardless of their budget or past experience, through the Unreal Development Kit. Asked why Epic adopts such a stance, when others restrict technology to their own studios until the launch of a particular product, Sweeney states that, "given the incredible pace of progress in the industry, software releases cannot afford to be a once-every-few-years phenomenon akin to Windows OS releases. Software must be treated as a living product, continually evolving and always available." As such, Sweeney "wants to share Epic’s latest and greatest stuff with the world." Making his point clear, he says, "From Unreal Engine 3 licensees building triple-A titles, to the UDK developer community, to students learning development at universities, everyone can get their hands on this stuff and check it out."

But what of Unreal Engine 3’s future? Bolting on new bits to an existing framework is rarely the most efficient method of development, primarily because the underlying core structure was never designed with such add-ons in mind, and while Sweeney agrees, he’s not ready to give up on Unreal Engine 3 just yet.

"We're extending Unreal Engine 3 to remain the most powerful and feature-rich engine for the duration of this hardware generation." The next generation will arrive one day, and on that point Sweeney says, "as we move to the next hardware generation -- thinking beyond current DirectX 11 hardware to future consoles and computers with entirely new levels of performance -- we'll combine the latest and greatest of Unreal Engine 3 with significant new systems and simplification and re-architecting of existing systems to become Unreal Engine 4."

Samaritan’s protagonist squares off against an enforcement mech shortly before the scene fades to black.

So there you have it: Unreal Engine 3 is here to stay for the foreseeable future, and the fantastic new technologies outlined in this article will begin to emerge in games in the near future, though the complete package may yet be some way off.

We hope you have enjoyed this informative look into the DirectX 11 technology of Unreal Engine 3, and if you have any comments please feel free to leave them on our forums.
