This is an archive of the original TweakGuides website, with post-archival notes added in purple text. See here for more details.

The Gamer's Graphics & Display Settings Guide

[Page 6] Graphics Settings - Resolution

Resolution

Display resolution, often simply called Resolution, refers to the total amount of visual information on a display screen. In computer graphics, the smallest unit of graphical information is called a Pixel: a small dot with a particular color and brightness. Every computer image on your screen is a pixellated sample of the original 3D information held by your graphics card, because that is how a monitor displays 3D information on a 2D screen surface. When you change the resolution in a game, you are telling the game to provide more or fewer pixels, and hence more or fewer samples of that original information for your system to process and display on screen. The higher the resolution, the clearer the image will look, but the more work your system must do to calculate it, resulting in lower FPS.

The resolution setting in a game or in Windows tells you precisely how many pixels are used to display an image. Resolution is typically shown in pixel width x pixel height format. For example, a game running at 1920x1200 resolution has 1,920 x 1,200 = 2,304,000 pixels in total on the screen making up the image. This value can also be expressed in Megapixels (millions of pixels), which in this example would be around 2.3 megapixels. The human eye takes in all these separate pixels and, at a reasonable distance, perceives them as a single smooth image, much like looking at a picture in a newspaper from a distance as opposed to close up.
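The pixel count and megapixel figures above are simple arithmetic. The short Python sketch below (the function names are illustrative only, not from any library) reproduces them for a few common resolutions:

```python
def pixel_count(width: int, height: int) -> int:
    """Total number of pixels for a width x height resolution."""
    return width * height


def megapixels(width: int, height: int) -> float:
    """Pixel count expressed in millions of pixels (megapixels)."""
    return pixel_count(width, height) / 1_000_000


# A few common resolutions, including the 1920x1200 example from the text
for w, h in [(1280, 720), (1680, 1050), (1920, 1200)]:
    print(f"{w}x{h}: {pixel_count(w, h):,} pixels, {megapixels(w, h):.1f} MP")
```

For 1920x1200 this prints 2,304,000 pixels and 2.3 MP, matching the figures above.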

To understand the practical aspects of resolution better we need to look at the structure of the types of screens on which pixels are displayed.

Dot Pitch

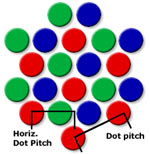

The screen on a traditional CRT monitor is made up of lots of tiny Phosphors. It is these phosphors which glow briefly when struck by the beam from the electron gun inside the monitor and produce the image we see on a CRT screen. In Aperture Grille type CRT monitors, the phosphors are separated into fine red, green and blue vertical strips; in Shadow Mask type CRT monitors, the phosphors are separated into groups of tiny red, green and blue dots.

A pixel may be the smallest unit in computer graphics, but a single pixel on a CRT monitor is still made up of several phosphors. At maximum resolution on a CRT monitor, a single graphics pixel is made up of a 'triad' of phosphors: one red, one green and one blue phosphor. These three are necessary to generate all the required colors of a single pixel.

We refer to the distance between similar-colored phosphors as Dot Pitch, which is measured in millimeters. Effectively, this is a measure of how big the smallest possible pixel would be on a CRT. The lower the dot pitch, the finer the image the CRT display can show, and typically the higher its maximum supported resolution. Most modern CRT monitors have a dot pitch of around 0.25 to 0.28mm.

Importantly, a CRT displays graphics using a moving electron beam which is manipulated magnetically and projected onto the phosphors on the screen. This, along with its large number of phosphors, means that a CRT monitor can rescale an image to any of a range of resolutions up to its maximum resolution without any noticeable degradation in image quality. The reason for this is covered further below.

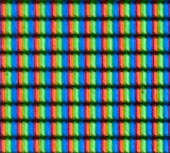

Fixed Pixel Structure

LCD monitors differ from CRT monitors in that they do not have an electron beam or lots of tiny phosphors lighting up. Instead they display images using a grid of fixed square or rectangular Liquid Crystal Cells which twist to allow varying degrees of light to pass through them. This is why they are also known as fixed pixel displays.

Just like a phosphor triad, however, each LCD cell has a red, green and blue component to facilitate proper color reproduction for each pixel. Most LCD monitors have now reached the point at which the distance between these cells, their 'Pixel Pitch', is similar to the Dot Pitch of a traditional CRT. However, because of the way LCDs work and their fixed pixel composition, LCD monitors can only provide optimal image quality at their maximum supported resolution, otherwise known as the Native Resolution. At other resolutions, the image can appear blurrier and exhibit visual artifacts. The reason for this is discussed below.
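To put numbers to the claim that LCD pixel pitch is now similar to CRT dot pitch, a panel's pitch can be estimated from its diagonal size and resolution. This is a rough sketch that assumes square pixels and an exact advertised diagonal; the 24-inch 1920x1200 panel is a hypothetical example, not a specific product:

```python
import math


def pixel_pitch_mm(diagonal_inches: float, width_px: int, height_px: int) -> float:
    """Approximate pixel pitch in mm, assuming square pixels.

    Divides the physical diagonal by the number of pixels along the diagonal.
    """
    diagonal_mm = diagonal_inches * 25.4           # 1 inch = 25.4 mm
    diagonal_px = math.hypot(width_px, height_px)  # pixels along the diagonal
    return diagonal_mm / diagonal_px


# Hypothetical example: a 24-inch panel with a native resolution of 1920x1200
print(f"{pixel_pitch_mm(24, 1920, 1200):.3f} mm")
```

For this example the result is roughly 0.27mm, inside the 0.25 to 0.28mm range quoted above for CRT dot pitch.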

Analog vs. Digital

The fundamental difference between a CRT and an LCD monitor is that a CRT monitor is based on Analog display technology, while an LCD monitor is a Digital display device. Analog signals are continuous and infinitely variable, whereas digital signals come in discrete steps.

A CRT monitor only accepts analog input. Thus the graphics card's RAMDAC (Random Access Memory Digital-to-Analog Converter) has to convert all the digital image data the card uses to calculate and create an image into analog form before sending it to a CRT monitor. The monitor then uses this analog information, in the form of voltage variations, electron beams and magnetic fields, to paint the picture on your monitor screen, resizing it as necessary to fit the display space. The results are similar to an image from a movie projector being thrown onto a wall and adjusted to fit as required, such as by moving the projector closer or further away, and adjusting the focus.

An LCD monitor, on the other hand, can receive either digital or analog information depending on its connection type, but ultimately all data must be in digital form before it can be used. At resolutions lower than its native resolution, the monitor has to digitally rescale an image to fit into its fixed pixel structure. When an image doesn't fit evenly into the native resolution, an LCD monitor can do one of two things: show the image at its original resolution and put black bars around it to fill in the remaining space, or stretch the image to fit the screen space. As a result, when you use any resolution lower than the maximum (native) resolution, the image will typically show visual glitches and blurring. This is because the monitor has to Interpolate (recalculate with some guessing) the data to make it all fit into its fixed pixel structure. It's like creating a range of different sized objects out of a fixed number of Lego blocks; some objects will look relatively good, while others will look more blocky.
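To see why interpolation blurs the image, consider a single row of pixels containing a hard black-to-white edge being stretched to a wider row. The sketch below uses plain linear interpolation, which is an assumption: real monitor scalers use their own filters, which vary by manufacturer, and work in two dimensions. The function name is illustrative only.

```python
def resample_row(row: list[float], new_len: int) -> list[float]:
    """Linearly interpolate a row of pixel values to a new length.

    A simplified 1D stand-in for the rescaling an LCD performs.
    """
    if new_len == 1 or len(row) == 1:
        return [row[0]] * new_len
    scale = (len(row) - 1) / (new_len - 1)  # maps output index -> source position
    out = []
    for i in range(new_len):
        pos = i * scale
        left = int(pos)                     # nearest source pixel to the left
        right = min(left + 1, len(row) - 1)
        frac = pos - left                   # how far we are towards the right pixel
        out.append(row[left] * (1 - frac) + row[right] * frac)
    return out


# A hard black (0) to white (255) edge: 4 source pixels stretched across 5 panel pixels
print(resample_row([0, 0, 255, 255], 5))
```

This prints `[0.0, 0.0, 127.5, 255.0, 255.0]`: the stretched row gains a mid-gray pixel that did not exist in the source, which on screen appears as a softened, slightly blurred edge.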

The bottom line is that digital information is discrete and precise, while analog information is continuous and imperfect. That is why an LCD monitor running at its native resolution shows a much sharper image than a CRT monitor at the same resolution. But once resolutions are changed, the LCD monitor has a harder time rearranging everything to fit properly, while a CRT monitor has no trouble at all.

Aspect Ratio

The ratio of the pixel width of any given resolution to its pixel height is called the Aspect Ratio. For example, the aspect ratio for a resolution of 1920x1200 is calculated first as width divided by height (1,920/1,200) = 1.6. To then express this as a proper aspect ratio, find the smallest integer (whole number) which, when multiplied by this ratio, results in a whole number. The smallest integer which turns 1.6 into a whole number is 5. Multiply 1.6 by 5, and you have 8. Thus the aspect ratio is 8:5, which can also be expressed as 16:10. Similarly, a resolution of 1280x720 gives a width to height ratio of 1.777... (roughly 1.78). The smallest integer which turns this into a whole number is 9, and 9 x 1.777... = 16, so the aspect ratio is 16:9.

The standard aspect ratio for traditional televisions and computer monitors used to be 4:3 (or 5:4 for some computer monitors), while the standard aspect ratio for the current widescreen TV and PC monitor format is 16:9 or 16:10. Resolutions which precisely match the aspect ratio of your monitor will display correctly, while those that do not will either appear distorted or be surrounded by black bars at the sides and/or top and bottom of the screen.
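The multiplier method above is equivalent to dividing both the width and the height by their greatest common divisor, which also avoids the rounding problem of working with values such as 1.78. A minimal Python sketch (the function name is illustrative):

```python
from math import gcd


def aspect_ratio(width: int, height: int) -> tuple[int, int]:
    """Reduce width:height to lowest terms using the greatest common divisor."""
    d = gcd(width, height)
    return width // d, height // d


for w, h in [(1920, 1200), (1280, 720), (1280, 1024), (1600, 1200)]:
    a, b = aspect_ratio(w, h)
    print(f"{w}x{h} -> {a}:{b}")
```

This gives 8:5, 16:9, 5:4 and 4:3 respectively. Note that the method always reports 16:10 in its lowest terms, 8:5.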

Almost all LCD monitors now being sold are classed as 'widescreen', as the traditional 4:3 monitors have been phased out. But in actuality there are two different widescreen aspect ratios for computer monitors: 16:9 and 16:10. Until recently, the separation has been reasonably clear: 16:9 is considered a movie aspect ratio, used for displays primarily intended for TV/movie playback such as Plasma and LCD TVs. The 16:10 ratio, on the other hand, is considered a PC-exclusive aspect ratio, and has been used almost solely for LCD computer monitors. In recent times, however, more and more computer monitors are being released as 16:9. This isn't necessarily due to more people using their computer monitors for watching movies; it's actually because hardware manufacturers find the production of 16:9 panels more cost efficient, as pointed out in this article. As a result, 16:10 LCD monitors are being deliberately phased out in favour of 16:9.

The 16:10 aspect ratio was a compromise designed specifically for PCs, giving them more vertical room than a 16:9 monitor. Browsing the Internet, for example, usually benefits from more height than width, as web pages are not designed to use horizontal space efficiently, and more height means less scrolling to read text. The same is true for word processing, since the standard A4 and Letter page formats are much taller than they are wide. Furthermore, displaying a 16:9 image on a 16:10 monitor causes no major issue: the monitor maintains its native resolution and simply adds small black bars to the top and bottom of the screen, without any major loss in image size. A 16:9 monitor, however, lacks the vertical pixels to show a 16:10 image at the same width, and thus must step down a resolution or two to show the image in its entirety, adding black bars to the sides in the process. In short, at the same screen size, a 16:10 monitor provides more screen real estate. For those who use their PCs for normal desktop tasks such as browsing and writing emails and documents, 16:10 is therefore a better choice at most screen sizes. However, if you prefer a 16:9 monitor, or only have the choice of a 16:9 monitor, it doesn't make a substantial difference: manufacturers are pushing consumers towards 16:9, so support for 16:9 resolutions will only continue to improve as it becomes the standard.
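The black bar sizes in these two scenarios can be worked out by scaling the image by the largest factor that still fits the panel while preserving its aspect ratio. This is a simplified geometric sketch (the function name is hypothetical); actual graphics card and monitor scaling modes may position or round the image differently:

```python
def fit_with_bars(src_w: int, src_h: int, panel_w: int, panel_h: int) -> tuple[int, int, int, int]:
    """Fit a source image onto a panel without distorting it.

    Returns (scaled_w, scaled_h, side_bar, top_bar), where side_bar is the
    black bar width on EACH side and top_bar the bar height at top AND bottom.
    """
    scale = min(panel_w / src_w, panel_h / src_h)  # largest scale that still fits
    out_w = round(src_w * scale)
    out_h = round(src_h * scale)
    return out_w, out_h, (panel_w - out_w) // 2, (panel_h - out_h) // 2


print(fit_with_bars(1920, 1080, 1920, 1200))  # 16:9 image on a 16:10 panel
print(fit_with_bars(1920, 1200, 1920, 1080))  # 16:10 image on a 16:9 panel
```

A 1920x1080 (16:9) image on a 1920x1200 (16:10) panel fits unscaled with 60-pixel bars at the top and bottom, whereas a 1920x1200 image on a 1920x1080 panel has to shrink to 1728x1080, leaving 96-pixel bars on each side. Stretching to fill the screen instead would simply use the full panel size and distort the image.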

Importantly, there are various scaling options available in your graphics card control panel and/or monitor options which control how an image is displayed if it does not match the aspect ratio of your monitor. The details are provided under the 'Adjust Desktop Size and Position' section on this page of my Nvidia Forceware Tweak Guide, and on this page of my ATI Catalyst Tweak Guide under 'Digital Panel Properties'. Using these settings, you can both increase image quality by forcing your graphics card to rescale an image before sending it to your monitor, and fix any aspect ratio-related problems, such as a 'squished' image.

Interlace & Progressive

Something I haven't covered yet is the concept of Interlace and Progressive scanning. This is not an issue of any major concern on PC monitors, as all of them use progressive scanning, which is optimal, and all modern games send progressive data to your monitor. However, since more and more people are hooking up their PCs to a High Definition TV (HDTV), this is an area you should understand more clearly.

Without getting into a huge amount of detail, interlacing is a compromise developed early on for traditional CRT TVs, allowing them to display higher resolution images within the existing amount of transmitted information, and also to double their refresh rate from 30 to 60Hz to prevent noticeable flickering. It does this by alternately displaying all the odd-numbered horizontal lines in an image, then the even-numbered ones, and back again. Due to persistence of vision, our eyes do not notice the gaps; we see an entire image made up of odd and even lines. Interlacing is inferior to progressive scanning, which draws the entire image on screen as a single completed frame without breaking it up into these odd and even fields. Full progressive frames displayed at 60Hz provide smoother image quality than interlaced fields displayed at 60Hz.
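The odd/even split can be illustrated by treating a frame as a list of horizontal lines. This Python sketch is a simplification with illustrative function names: in real interlaced video each field is captured at a different instant, so moving objects do not recombine as cleanly as in this static example.

```python
def split_fields(frame: list[str]) -> tuple[list[str], list[str]]:
    """Split a progressive frame into its odd and even fields.

    Lines are numbered from 1: the odd field holds lines 1, 3, 5...,
    the even field holds lines 2, 4, 6...
    """
    odd = frame[0::2]   # lines 1, 3, 5, ... (list indices 0, 2, 4, ...)
    even = frame[1::2]  # lines 2, 4, 6, ... (list indices 1, 3, 5, ...)
    return odd, even


def weave(odd: list[str], even: list[str]) -> list[str]:
    """Interleave two fields back into one full frame, line by line."""
    frame = []
    for i in range(max(len(odd), len(even))):
        if i < len(odd):
            frame.append(odd[i])
        if i < len(even):
            frame.append(even[i])
    return frame


frame = [f"line {n}" for n in range(1, 7)]
odd, even = split_fields(frame)
print(odd)   # each field carries only half of the frame's lines
print(even)
assert weave(odd, even) == frame
```

Because each field carries only half the lines, showing 60 fields per second delivers the equivalent of 30 complete frames per second, whereas progressive scanning at 60Hz delivers 60 complete frames.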

So when using an HDTV you may see resolutions denoted as 1080p, 1080i, 720p, or 480p, for example. The numerical part indicates the pixel height of the image, and the i or p after the numbers denotes interlace or progressive. In general the same resolution looks better using progressive (if supported on your display), and on fixed-pixel displays like LCD or Plasma HDTVs, the closer the pixel height is to your display's native resolution, the better it will look; otherwise the image has to be rescaled, and as covered before, rescaled images can suffer a reduction in image quality.

Resolution Tips

Let's take some time to consider how we can use the information above to enhance gaming. For starters, higher resolutions typically reduce the jaggedness of outlines in computer graphics. This is precisely what Antialiasing tries to do as well. So it is often wise, especially on systems with fast graphics cards combined with slower CPUs, to consider raising your resolution rather than using higher levels of antialiasing; this can provide better performance than heavy antialiasing with no real drop in image quality.

For LCD monitor owners, there is a simple trick you can use to boost performance and also get some 'free' antialiasing: run a game at a resolution just below your native resolution. For example, running a game at 1680x1050 on a 1920x1200 LCD may sound odd at first, but aside from the FPS boost you get from lowering your resolution, the slight blurring from running at a non-native resolution reduces the harshness of jagged lines, courtesy of your monitor's attempts at rescaling the image with interpolation. This is not as good as real antialiasing, but it's worth a try if you need the extra FPS and have no other option.
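The potential FPS gain in this example comes from drawing fewer pixels. This sketch compares pixel counts for 1680x1050 versus a 1920x1200 native resolution; it assumes nothing about actual frame rates, which rarely scale exactly with pixel count. The function name is illustrative.

```python
def relative_pixel_load(w: int, h: int, native_w: int, native_h: int) -> float:
    """Pixels rendered at w x h as a fraction of the native resolution's pixel count."""
    return (w * h) / (native_w * native_h)


load = relative_pixel_load(1680, 1050, 1920, 1200)
print(f"{load:.1%} of native pixels, {1 - load:.1%} fewer")  # 76.6% of native pixels, 23.4% fewer
print(f"scale factor: {1920 / 1680:.3f}x")                    # same factor vertically: 1200 / 1050
```

The scale factor of about 1.14 is not a whole number, so the monitor cannot map each rendered pixel onto a whole block of panel pixels; it has to interpolate, which is where the softening of jagged edges comes from.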

In general however, for optimal image quality you should increase any resolution setting to the maximum possible - whether in a game, or in Windows - as this matches your LCD monitor's native resolution and provides the crispest possible image.


This work is licensed under a Creative Commons Attribution 4.0 International License.