GeForce GTX 680

Note that this is an archived copy of the original article. Please see here for a more detailed explanation.

March 22nd, 2012

By James Wang

From Fermi to Kepler

Every two years or so, NVIDIA engineers set out to design a new GPU architecture. The architecture defines a GPU's building blocks, how they're connected, and how they work. The architecture is the basis not just for a single chip but a family of chips that serves a whole spectrum of systems, from high performance PCs to wafer thin notebooks, from medical workstations to supercomputers. It is the blueprint for every NVIDIA GPU for the next two years.

Just over two years ago, NVIDIA launched the Fermi architecture with the GeForce GTX 480. Named after the Italian nuclear physicist Enrico Fermi, this new architecture brought two key advancements. First, it brought full geometry processing to the GPU, enabling a key DirectX 11 technique called tessellation with displacement mapping. Used in games such as Battlefield 3 and Crysis 2, this technique greatly improves the geometric realism of water, terrain, and game characters. Second, Fermi greatly improved the GPU's performance in general computation, and today, Fermi GPUs power three out of the top five supercomputers in the world.

Today, NVIDIA is launching Kepler, the much anticipated successor to the Fermi architecture. With Kepler we wanted not only to build the world's fastest GPU, but also the world's most power efficient. Feature wise, we added new technologies that fundamentally improve the smoothness of each frame and the richness of the overall experience.

Why Is Power Efficiency Important?

When we first launched Fermi with the GeForce GTX 480, people told us how much they loved the performance, but they also told us they wished it consumed less power. Gamers want top performance, but they want it in a quiet, power efficient form factor. The feedback we received from Fermi really drove this point home. With Kepler, one of our top priorities was building a flagship GPU that was also a pleasure to game with.

Kepler introduces two key changes that greatly improve the GPU's efficiency. First, we completely redesigned the streaming multiprocessor, the most important building block of our GPUs, for optimal performance per watt. Second, we added a feature called GPU Boost that dynamically increases clock speed to improve performance within the card's power budget.

Kepler's new SM, called SMX, is a radical departure from past designs. SMX eliminates the Fermi "2x" processor clock and uses the same base clock across the GPU. To balance out this change, SMX uses an ultra wide design with 192 CUDA cores. With a total of 1536 cores across the chip, the GeForce GTX 680 handily outperforms the GeForce GTX 580.

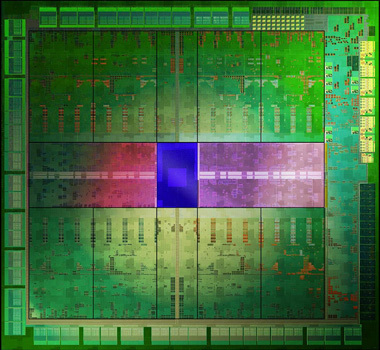

The GeForce GTX 680 GPU is made up of 3.54 billion transistors. The entire chip has been designed for optimal performance per watt.

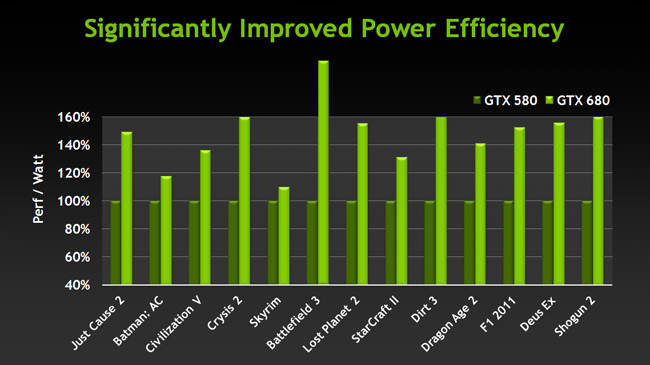

But what's benefited the most is power efficiency. Compared to the original Fermi SM, SMX has twice the performance per watt. Put another way, given a watt of power, Kepler's SMX can do twice the amount of work as Fermi's SM. And this is measured apples-to-apples, on the same manufacturing process. Imagine a conventional 50 watt light bulb that shines as brightly as a 100 watt light bulb—that's what Kepler's like when gaming.

The GeForce GTX 680 is significantly more power efficient than its predecessor. For the gamer, this translates into a cooler, quieter experience, and less overall power draw.

The benefit of this power improvement is most obvious when you plug a GeForce GTX 680 into your system. If you've owned a high end graphics card before, you'll know that it requires an 8-pin and 6-pin PCI-E power connector. With the GTX 680, you need just two 6-pin connectors. This is because the card draws at most 195 watts of power, compared to 244 watts on the GeForce GTX 580. If there ever was a middleweight boxer that fought like a heavyweight, that would be the GeForce GTX 680.
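The connector arithmetic can be checked with a short sketch. The per-source limits used here (75 W from the PCI-E slot, 75 W per 6-pin connector, 150 W per 8-pin connector) are taken from the PCI Express specification rather than from this article, and the function and constant names are purely illustrative.

```python
# Power limits per source, in watts, per the PCI Express specification
# (an assumption of this sketch; the article does not quote these figures).
SLOT_W = 75
CONNECTOR_W = {"6-pin": 75, "8-pin": 150}


def board_power_budget(connectors):
    """Return the maximum power a card may draw from the slot plus its connectors."""
    return SLOT_W + sum(CONNECTOR_W[c] for c in connectors)


gtx680_budget = board_power_budget(["6-pin", "6-pin"])  # 225 W
gtx580_budget = board_power_budget(["8-pin", "6-pin"])  # 300 W

# Compare against the maximum draw quoted in the article.
print(gtx680_budget >= 195)  # True
print(gtx580_budget >= 244)  # True
```

Under these limits, 244 watts would not fit within two 6-pin connectors plus the slot (225 W), which is why the older card needs the 8-pin connector.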

GPU Boost

SMX doubled the performance per watt, but what if the GPU wasn't actually using its full power capacity? Going back to the light bulb analogy, what if a 100 watt light bulb was sometimes running at 90 watts, or even 80 watts? As it turns out, that's exactly how GPUs behave today.

The reason for this is actually pretty simple. Like light bulbs, GPUs are designed to operate under a certain wattage. This number is called the thermal design point, or TDP. For a high-end GPU, the TDP has typically been about 250 watts. You can interpret this number as saying: this GPU's cooler can remove 250 watts of heat from the GPU. If it goes over this limit for an extended period of time, the GPU will be forced to throttle down its clock speed to prevent overheating. What this also means is that, to get the maximum performance, the GPU should operate close to its TDP without ever exceeding it.

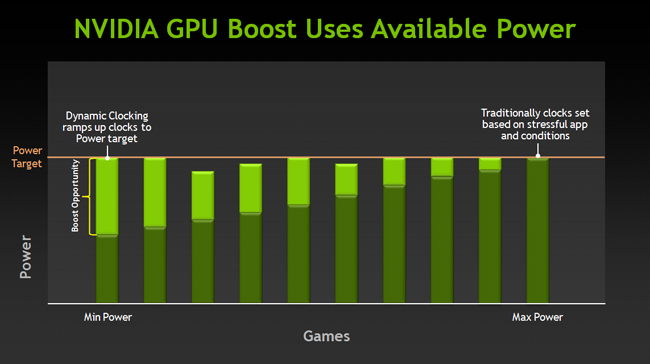

In reality, GPUs rarely reach their TDP when playing even the most intensive 3D games. This is because different games consume different amounts of power and the GPU's TDP is measured using the worst case. Popular games like Battlefield 3 or Crysis 2 consume far less power than a GPU's TDP rating. Only a few synthetic benchmarks can push GPUs to their TDP limit.

For example, say your GPU has a TDP of 200 watts. What this means is that in the worst case, your GPU will consume 200 watts of power. If you happen to be playing Battlefield 3, it may draw as little as 150 watts. In theory, your GPU could safely operate at a higher clock speed to tap into this available headroom. But since it doesn't know the power requirements of the application ahead of time, it sticks to the most conservative clock speed. Only when you quit the game does it reduce to a lower clock speed for the desktop environment.

GPU Boost changes all this. Instead of running the GPU at a clock speed that is based on the most power hungry app, GPU Boost automatically adjusts the clock speed based on the power consumed by the currently running app. To take our Battlefield 3 example, instead of running at 150 watts and leaving performance on the table, GPU Boost will dynamically increase the clock speed to take advantage of the extra power headroom.

Different games use different amounts of power. GPU Boost monitors power consumption in realtime and increases the clock speed when there's available headroom.

How It Works

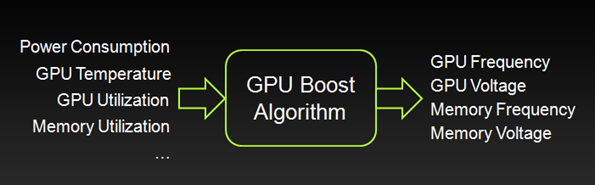

The most important thing to understand about GPU Boost is that it works through real time hardware monitoring as opposed to application based profiles. As an algorithm, it attempts to find the appropriate GPU frequency and voltage for a given moment in time. It does this by reading a huge swathe of data such as GPU temperature, hardware utilization, and power consumption. Depending on these conditions, it will raise the clock and voltage accordingly to extract maximum performance within the available power envelope. Because all this is done via realtime hardware monitoring, GPU Boost requires no application profiles. As new games are released, even if you don't update your drivers, GPU Boost "just works."

The GPU Boost algorithm takes in a variety of operating parameters and outputs the optimal GPU clock and voltage. Currently it does not alter memory frequency or voltage though it has the option to do so.
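The idea of spending unused power headroom on clock speed can be illustrated with a toy model. This is only a sketch, not NVIDIA's algorithm, which is not described in detail here: it assumes power scales linearly with clock speed (real hardware also varies voltage and reacts to temperature), and the step size, the clock ceiling, and every name below are hypothetical.

```python
def boost_clock(base_mhz, power_at_base_w, power_target_w, max_mhz, step_mhz=13):
    """Raise the clock in fixed steps while estimated power stays within the target.

    Toy model: power is assumed to scale linearly with clock speed.
    """
    clock = base_mhz
    while clock + step_mhz <= max_mhz:
        candidate = clock + step_mhz
        estimated_power = power_at_base_w * candidate / base_mhz
        if estimated_power > power_target_w:
            break  # the next step would exceed the power target
        clock = candidate
    return clock


# A game drawing 150 W at the base clock leaves plenty of headroom under a
# 200 W target, so the clock climbs to the (hypothetical) 1110 MHz ceiling.
print(boost_clock(1006, 150, 200, max_mhz=1110))  # 1110

# An application already drawing 200 W at the base clock gets no boost.
print(boost_clock(1006, 200, 200, max_mhz=1110))  # 1006
```

The point of the sketch is that the decision depends only on measured power, not on which application is running, which is why no per-game profile is needed.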

How Much Boost?

Because GPU Boost happens in realtime and the boost factor varies depending on exactly what's being rendered, it's hard to pin the performance gain down to a single number. To help clarify the typical performance gain, all Kepler GPUs with GPU Boost will list two clock speeds on their specification sheets: the base clock and the boost clock. The base clock equates to the current graphics clock on all NVIDIA GPUs. For Kepler, that's also the minimum clock speed that the GPU cores will run at in an intensive 3D application. The boost clock is the typical clock speed that the GPU will run at in a 3D application.

For example, the GeForce GTX 680 has a base clock of 1006 MHz and a boost clock of 1058 MHz. What this means is that in intensive 3D games, the lowest the GPU will run at is 1006 MHz, but most of the time, it'll likely run at around 1058 MHz. It won't run exactly at this speed; based on realtime monitoring and feedback, it may go higher or lower, but in most cases it will run close to this speed.
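To put the two clocks in perspective, the typical uplift can be worked out directly from the quoted figures. The helper name is illustrative, and a clock increase is only an upper bound on the frame rate gain you might see.

```python
def boost_uplift_percent(base_mhz, boost_mhz):
    """Return the boost clock's increase over the base clock, in percent."""
    return (boost_mhz - base_mhz) / base_mhz * 100.0


# GeForce GTX 680 figures quoted above: 1006 MHz base, 1058 MHz boost.
print(f"{boost_uplift_percent(1006, 1058):.1f}%")  # 5.2%
```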

GPU Boost doesn't take away from overclocking. In fact, with GPU Boost, you now have more than one way to overclock your GPU. You can still increase the base clock just like before and the boost clock will increase correspondingly. Alternatively, you can increase the power target. This is most useful for games that are consuming near 100% of this power target.

Genuinely Smoother Gameplay

Despite the gorgeous graphics seen in many of today's games, there are still some highly distracting artifacts that appear in gameplay despite our best efforts to suppress them. The most jarring of these is screen tearing. Tearing is easily observed when the mouse is panned from side to side. The result is that the screen appears to be torn between multiple frames with an intense flickering effect. Tearing tends to be aggravated when the framerate is high since a large number of frames are in flight at a given time, causing multiple bands of tearing.

An example of screen tearing in Battlefield 3.

Vertical sync (V-Sync) is the traditional remedy to this problem, but as many gamers know, V-Sync isn't without its problems. The main problem with V-Sync is that when the framerate drops below the monitor's refresh rate (typically 60 fps), the framerate drops disproportionately. For example, dropping slightly below 60 fps results in the framerate dropping to 30 fps. This happens because the monitor refreshes at fixed intervals (although an LCD doesn't have this limitation, the GPU must treat it as a CRT to maintain backward compatibility) and V-Sync forces the GPU to wait for the next refresh before updating the screen with a new image. This results in notable stuttering when the framerate dips below 60, even if just momentarily.

When V-Sync is enabled and the framerate drops below the monitor's refresh rate, the framerate drops disproportionately, causing stuttering.
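The drop from just under 60 fps to 30 fps follows from each frame having to wait for a whole number of refresh intervals. The sketch below models this under simplifying assumptions that are not stated in the article: double buffering, a constant render time per frame, and a 60 Hz display. The function name is illustrative.

```python
import math


def vsync_fps(render_fps, refresh_hz=60):
    """Effective framerate with V-Sync on, for a constant per-frame render time."""
    # A frame that misses one refresh has to wait for the next one, so each
    # frame occupies a whole number of refresh intervals.
    intervals_per_frame = math.ceil(refresh_hz / render_fps)
    return refresh_hz / intervals_per_frame


print(vsync_fps(75))  # 60.0 (capped at the refresh rate)
print(vsync_fps(59))  # 30.0 (each frame takes two refresh intervals)
print(vsync_fps(29))  # 20.0 (each frame takes three refresh intervals)
```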

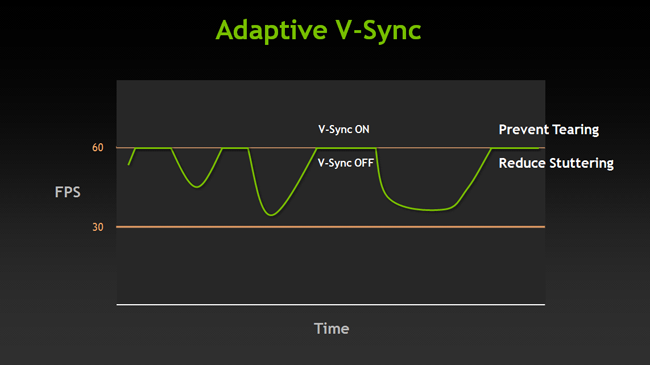

With NVIDIA's new Release 300 drivers, we are introducing a new option in the control panel called Adaptive V-Sync. Adaptive V-Sync combines the benefit of V-Sync while minimizing its downside. With Adaptive V-Sync, V-Sync is enabled only when the framerate exceeds the refresh rate of the monitor. When the framerate drops below this rate, V-Sync is automatically disabled, minimizing stuttering in games.

Adaptive V-Sync dynamically turns V-Sync on and off to maintain a more stable framerate.

By dynamically switching V-Sync on and off based on in-game performance, Adaptive V-Sync makes V-Sync a much more attractive option, especially for gamers who put a high premium on smooth framerates.
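The switching rule described above amounts to a single comparison against the refresh rate. The following is a minimal sketch of that rule only; how the driver actually measures the framerate and when it switches are not covered in this article, and the function name is hypothetical.

```python
def adaptive_vsync(render_fps, refresh_hz=60):
    """Return (vsync_enabled, displayed_fps) for one moment of gameplay."""
    if render_fps >= refresh_hz:
        # The GPU is at least as fast as the display: sync to avoid tearing.
        return True, refresh_hz
    # The GPU is slower than the display: skip the sync so the framerate
    # is not forced down to a fraction of the refresh rate.
    return False, render_fps


for fps in [75, 62, 58, 45]:
    print(fps, adaptive_vsync(fps))
# 75 (True, 60)
# 62 (True, 60)
# 58 (False, 58)
# 45 (False, 45)
```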

FXAA: Anti-Aliasing at Warp Speed

Nothing ruins a beautiful looking game like jaggies. They make straight lines look crooked and generate distracting crawling patterns when the camera is in motion. The fix for jaggies is anti-aliasing, but today's methods of doing anti-aliasing are very costly to frame rates. To make matters worse, their effectiveness at removing jaggies has diminished in modern game engines.

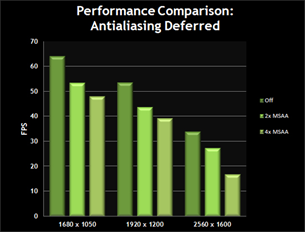

Almost all games make use of a form of antialiasing called multi-sample anti-aliasing (MSAA). MSAA renders the screen at an extra high resolution then downsamples the image to reduce the appearance of aliasing. The main problem with this technique is that it requires a tremendous amount of video memory. For example, 4x MSAA requires four times the video memory of standard rendering. In practice, a lot of gamers are forced to disable MSAA in order to maintain reasonable performance.
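A rough estimate shows how the memory cost scales with the sample count. The figures below assume a 1920 x 1080 frame with 4 bytes of color and 4 bytes of depth per sample; both are assumptions of this sketch, which also ignores compression and the extra render targets that deferred engines use.

```python
def framebuffer_mib(width, height, samples=1, bytes_per_sample=8):
    """Estimate color + depth buffer size in MiB for a given MSAA sample count."""
    return width * height * samples * bytes_per_sample / 2**20


print(f"{framebuffer_mib(1920, 1080):.1f} MiB")             # 15.8 MiB
print(f"{framebuffer_mib(1920, 1080, samples=4):.1f} MiB")  # 63.3 MiB
```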

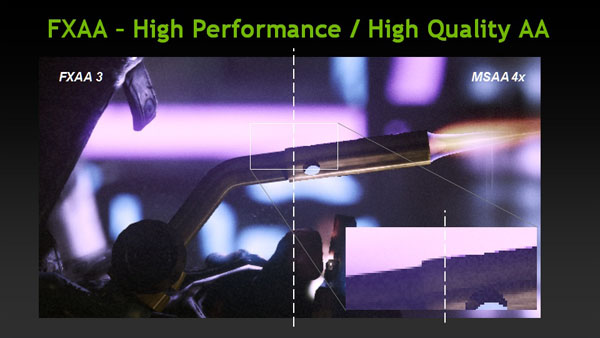

FXAA is a new way of performing antialiasing that's fast, effective, and optimized for modern game engines. Instead of rendering everything at four times the resolution, FXAA picks out the edges in a frame based on contrast detection. It then smooths out the aliased edges based on their gradient. All this is done as a lightweight, post processing shader.
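The general idea of contrast-based edge smoothing can be sketched in a few lines. This is a heavily simplified illustration and not the FXAA algorithm itself: it flags a pixel as an edge when the brightness range across it and its four neighbors exceeds a threshold, then blends it with the neighbor average, without the edge-direction analysis a real implementation would use. The function name and threshold are hypothetical.

```python
def smooth_edges(luma, threshold=0.25):
    """Blend pixels whose local contrast exceeds `threshold` (luma values in 0..1)."""
    height, width = len(luma), len(luma[0])
    out = [row[:] for row in luma]  # border pixels are left unchanged
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            center = luma[y][x]
            neighbors = [luma[y - 1][x], luma[y + 1][x], luma[y][x - 1], luma[y][x + 1]]
            contrast = max(neighbors + [center]) - min(neighbors + [center])
            if contrast > threshold:
                # High contrast: treat as an edge and soften it.
                out[y][x] = (center + sum(neighbors) / 4) / 2
    return out


# A hard vertical edge between black (0.0) and white (1.0).
image = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]
print(smooth_edges(image)[1])  # [0.0, 0.125, 0.875, 1.0]
```

Because the pass reads only the finished frame, it needs no extra samples per pixel, which is the property that keeps this class of technique cheap in memory.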

FXAA is not only faster than 4xMSAA, but produces higher quality results in game engines that make use of extensive post processing.

Compared to 4xMSAA, FXAA produces comparable if not smoother edges. But unlike 4xMSAA, it consumes no additional memory and runs almost as fast as no antialiasing. FXAA has the added benefit that it works on transparent geometry such as foliage and helps reduce shader based aliasing that often appears on shiny materials.

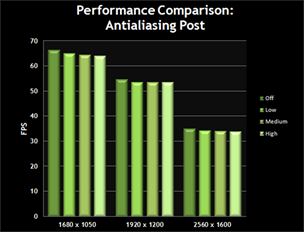

Comparison of MSAA (Antialiasing Deferred) vs. FXAA (Antialiasing Post) performance in Battlefield 3.

While FXAA is available in a handful of games today, with the R300 series driver, we've integrated it into the control panel. This means you'll be able to enable it in hundreds of games, even legacy titles that don't support anti-aliasing.

TXAA: Even Higher Quality Than FXAA

Computer generated effects in films spend a huge amount of computing resources on anti-aliasing. For games to truly approach film quality, developers need new antialiasing techniques that bring even higher quality without compromising performance.

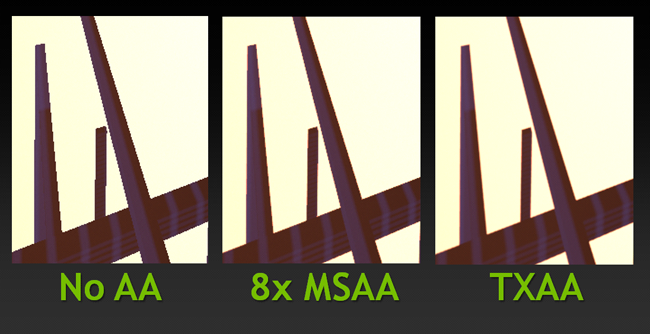

With Kepler, NVIDIA has invented an even higher quality AA mode called TXAA that is designed for direct integration into game engines. TXAA combines the raw power of MSAA with sophisticated resolve filters similar to those employed in CG films. In addition, TXAA can also jitter sample locations between frames for even higher quality.

TXAA is available with two modes: TXAA 1 and TXAA 2. TXAA 1 offers visual quality on par with 8xMSAA with performance similar to 2xMSAA, while TXAA 2 offers image quality that’s superior to 8xMSAA, with performance comparable to 4xMSAA.

TXAA 2 performs similarly to 4xMSAA, but produces higher quality results than 8xMSAA.

Like our FXAA technology, TXAA will be first integrated directly into the game engine. The following games, engines, and developers have committed to offering TXAA support: MechWarrior Online, Secret World, Eve Online, Borderlands 2, Unreal Engine 4, BitSquid, Slant Six Games, and Crytek.

NVIDIA Surround on a Single GeForce GTX 680

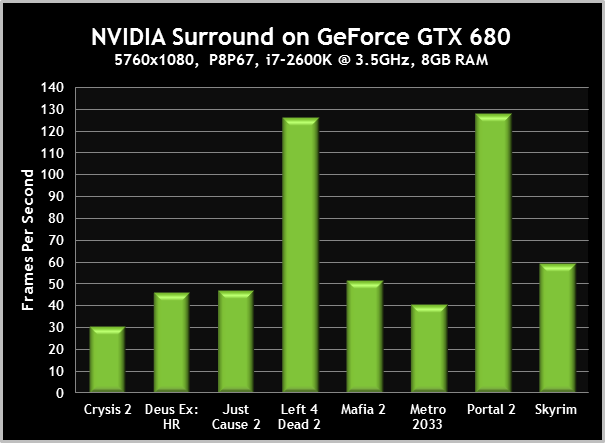

Nothing is as thrilling as playing your favorite games across three monitors. At 5760 x 1080, the expanded field of view fully engages your peripheral vision and provides a super immersive experience in racing and flight simulators. Previously, two NVIDIA GPUs were required to support three monitors. On Kepler, one card powers three monitors for gaming, with the option for a fourth monitor for web or IM.

NVIDIA's Kris Rey playing Skyrim across three monitors while referencing the Skyrim tweak guide on GeForce.com.

When three-monitor setups were first introduced, their cost was considered prohibitive. Today, you can buy a quality high definition panel for under $150. Starting out from just one monitor, it costs less than $300 to set up a surround system like the one above (minus the accessory panel). And with a GeForce GTX 680, you can power it from just a single graphics card.

A single GeForce GTX 680 is capable of playing most of today's games across three 1080p monitors. For the most demanding titles, SLI is recommended.

Quality Settings:

- Crysis 2, 30.33 FPS: DirectX 11 Ultra Upgrade installed, high-resolution textures enabled, Extreme detail level.

- Deus Ex: Human Revolution, 46.20 FPS: Highest possible settings, tessellation enabled, FXAA High enabled.

- Just Cause 2, 46.60 FPS: Maximum settings, CUDA water enabled, 4xMSAA, 16xAF.

- Left 4 Dead 2, 126.10 FPS: Maximum settings, 4xMSAA, 16xAF.

- Mafia 2, 51.35 FPS: Maximum settings, PhysX Medium enabled, AA enabled, AF enabled.

- Metro 2033, 40.72 FPS: DirectX 11 enabled, Depth of Field disabled, tessellation enabled, PhysX disabled, 4xMSAA, 16xAF.

- Portal 2, 127.90 FPS: Maximum settings, 4xMSAA, 16xAF.

- The Elder Scrolls V: Skyrim, 59.55 FPS: Ultra preset, Bethesda high-resolution texture pack, indoor cave scene.

In case you're wondering, here are some real-world performance results from the GeForce GTX 680, playing games across three 1080p monitors. As you can see, there is no skimping on quality here. Most of the games are being played at high quality, if not maximum.

Conclusion

The GeForce GTX 680 is quite unlike any flagship graphics card we've ever built. We've built the fastest GPU in the world for many generations now. With Kepler, we wanted to do more than just repeat that feat.

Kepler is really about starting from first principles and asking: beyond performance, how can we improve the gaming experience as a whole?

Gamers told us they want GPUs that are cooler, quieter, and more power efficient. So we re-designed the architecture to do just that. The GeForce GTX 680 consumes less power than any flagship GPU since the GeForce 8800 Ultra, yet it outperforms every GPU we or anyone else has ever built.

We wanted to make gaming not just faster, but smoother. FXAA and the new TXAA do both. Games get super smooth edges without tanking the performance.

Adaptive V-Sync improves upon a feature that many gamers swear by. Now you can play with V-Sync enabled and not worry about sudden dips in framerate.

Finally, a single GPU now powers a full NVIDIA Surround setup plus an accessory display. There is simply no better way to play a racing game or flight simulator. Add in NVIDIA PhysX technology, and the GTX 680 delivers an amazingly rich gaming experience.

Kepler is the outcome of over four years of research and development by some of the best and brightest engineers at NVIDIA. We hope you'll have as much fun gaming on our new GPU as we had building it.

For more information, please visit the GeForce GTX 680 product page, and if you have any questions please leave a comment below or drop by the GeForce Forums.
