Anisotropic Filtering

Note that this is an archived copy of the original article. Please see here for a more detailed explanation.

Anisotropic filtering improves the clarity and crispness of textured objects in games. Textures are images containing various types of data, such as color, transparency, reflectivity, and bumps (normals), that are mapped onto an object and processed by the GPU to give it a realistic appearance on your screen. Using a texture at its full native resolution everywhere in a scene is wasteful, however, because the observable level of detail depends on how far the textured surface is from the camera. On a distant surface, many texels (the pixels of a texture) fall within each screen pixel, so processing time is spent gathering texture samples that end up applied to a disproportionately small part of the image, which also tends to cause shimmering. To preserve both performance and image quality, mipmaps are used: copies of a master texture that have been pre-rendered at lower resolutions, which the graphics engine can select when the corresponding surface is at a given distance from the camera. With proper filtering, using multiple mipmap levels in a scene has no discernible impact on its appearance while greatly improving performance.

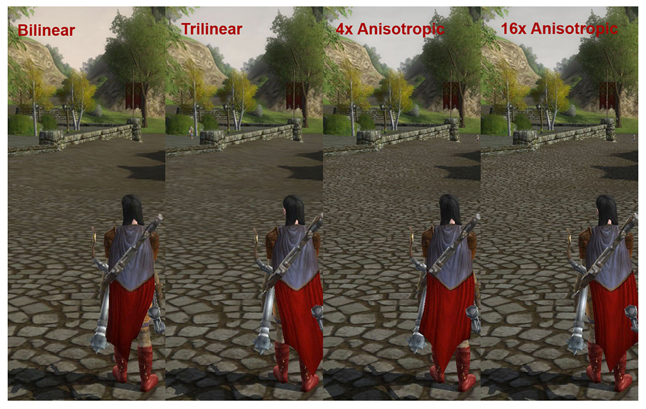
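
As a rough illustration of the mipmap idea, the following Python sketch builds a mipmap chain by averaging each 2x2 block of texels into one texel of the next level, then picks a level from how many base-level texels a single screen pixel covers. It assumes a square, power-of-two, single-channel texture stored as a list of lists of floats; the function names are hypothetical, the box filter is only one possible downsampling filter, and real GPUs derive the texels-per-pixel figure from screen-space derivatives rather than receiving it directly.

```python
import math


def build_mip_chain(texture):
    """Build a mipmap chain by 2x2 box-filter downsampling (hypothetical helper).

    `texture` is assumed to be a square, power-of-two list of lists of floats.
    Each level halves the width and height of the previous one, down to 1x1.
    """
    levels = [texture]
    while len(levels[-1]) > 1:
        prev = levels[-1]
        half = len(prev) // 2
        # Average each 2x2 block of texels into one texel of the next level.
        nxt = [
            [
                (prev[2 * y][2 * x] + prev[2 * y][2 * x + 1]
                 + prev[2 * y + 1][2 * x] + prev[2 * y + 1][2 * x + 1]) / 4.0
                for x in range(half)
            ]
            for y in range(half)
        ]
        levels.append(nxt)
    return levels


def select_mip_level(texels_per_pixel, num_levels):
    """Pick the nearest mip level for a pixel covering `texels_per_pixel` texels across."""
    if texels_per_pixel <= 1.0:
        return 0  # magnification: the full-resolution level is enough
    level = math.log2(texels_per_pixel)  # each level halves the resolution
    return min(int(round(level)), num_levels - 1)


tex = [[float((x + y) % 2) for x in range(8)] for y in range(8)]  # 8x8 checkerboard
chain = build_mip_chain(tex)
print([len(level) for level in chain])  # [8, 4, 2, 1]
print(chain[1][0][0])  # 0.5
print(select_mip_level(4.0, len(chain)))  # 2
```

For the 8x8 checkerboard, every texel below level 0 averages to 0.5, and a pixel that spans four base-level texels is served by level 2, which is a quarter of the original width.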

Because each mipmap level is conventionally half the width and height of the one before it (a power-of-two reduction), the level of detail a surface needs often falls between two mipmap levels, and the filtering method in use must compensate for this to avoid blurring and other visual artifacts. Bilinear filtering serves as the default, being the simplest and computationally cheapest form of texture filtering available: to calculate a pixel's final color, it takes the four texels nearest to the sampled point from the single mipmap chosen by the graphics engine and blends them, weighting each by its distance. Because bilinear filtering samples exclusively from that one mipmap, a perspective-distorted texture that spans the boundary between two mipmap levels shows an abrupt, visible shift in clarity where the levels change. Trilinear filtering, the next step up from bilinear filtering, smooths these transitions by taking a bilinear sample from each of the two closest mipmap levels and interpolating (averaging) between them. However, both methods assume that the texture faces the camera squarely, so they lose quality when a texture is viewed at a steep angle. In that case a pixel's footprint on the texture is long in the direction of depth and narrow across it, while the samples taken from the mipmap cover a square area. A square sample region sized to the long axis blurs the texture across the narrow one, and one sized to the narrow axis undersamples along the long one, producing aliasing.
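
The difference between bilinear and trilinear filtering can be sketched in a few lines of Python. `bilinear_sample` blends the four texels nearest a normalized coordinate within one mipmap level, and `trilinear_sample` takes a bilinear sample from each of the two levels around a fractional level of detail (`lod`) and interpolates between them. This is a simplified model, not how any particular GPU implements it: the names are hypothetical, textures are single-channel lists of floats, coordinates are clamped at the edges, and `lod` is passed in directly instead of being computed from the pixel's footprint.

```python
import math


def bilinear_sample(level, u, v):
    """Sample one mip level at normalized (u, v), blending the 4 nearest texels."""
    size = len(level)
    # Map to texel space so that texel centers sit at integer + 0.5.
    x = u * size - 0.5
    y = v * size - 0.5
    x0 = math.floor(x)
    y0 = math.floor(y)
    fx = x - x0  # horizontal weight toward the right-hand texels
    fy = y - y0  # vertical weight toward the lower texels

    def texel(ix, iy):
        # Clamp-to-edge addressing (an assumption; hardware supports other modes).
        ix = min(max(ix, 0), size - 1)
        iy = min(max(iy, 0), size - 1)
        return level[iy][ix]

    top = texel(x0, y0) * (1 - fx) + texel(x0 + 1, y0) * fx
    bottom = texel(x0, y0 + 1) * (1 - fx) + texel(x0 + 1, y0 + 1) * fx
    return top * (1 - fy) + bottom * fy


def trilinear_sample(chain, u, v, lod):
    """Bilinearly sample the two mip levels around `lod` and blend between them."""
    max_level = len(chain) - 1
    lod = min(max(lod, 0.0), float(max_level))
    lo = math.floor(lod)
    hi = min(lo + 1, max_level)
    t = lod - lo  # how far we are from the sharper level toward the blurrier one
    a = bilinear_sample(chain[lo], u, v)
    b = bilinear_sample(chain[hi], u, v)
    return a * (1 - t) + b * t


level0 = [[0.0, 1.0], [0.0, 1.0]]
level1 = [[0.5]]
chain = [level0, level1]
print(bilinear_sample(level0, 0.5, 0.5))  # 0.5
print(trilinear_sample(chain, 0.25, 0.25, 0.5))  # 0.25
```

A fractional `lod` such as 0.5 yields a value halfway between the two levels' bilinear results, which is what removes the visible seam that pure bilinear filtering shows where mipmap levels change.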

Anisotropic filtering (AF) provides superior image quality in virtually all cases at a slight cost in performance. In general, anisotropy means having properties that differ depending on direction; in texture filtering, it describes a pixel footprint that is stretched more in one direction than the other, which is the case for any texture not viewed exactly perpendicular to the camera. As previously mentioned, bilinear and trilinear filtering lose quality when textures are oblique to the camera because both methods take their samples from mipmaps as if the mapped pixel covered a perfectly square area of the texture, which is rarely true. Mipmaps themselves are isotropic (each level is reduced equally in both dimensions), so when a pixel's footprint is an elongated trapezoid, no single mipmap level matches it in both directions at once. To solve this, anisotropic filtering effectively stretches the sampling area along one axis of the mipmap by a ratio matching the texture's perspective distortion, up to the maximum level specified, and takes the appropriate number of samples along that axis. AF can operate at anisotropy levels between 1 (no stretching) and 16, which define the maximum ratio by which the sampling area can be stretched, but it is commonly offered to the user in powers of two: 2x, 4x, 8x, and 16x. The difference between these settings is therefore the steepest viewing angle that AF can fully compensate for. For example, 4x can handle footprints twice as elongated as 2x can, which corresponds to steeper angles, yet textures that need only 2x filtering still receive 2x filtering to save performance. Higher AF settings have subjectively diminishing returns because the extremely steep angles at which they make a difference cover an increasingly small part of a typical scene.
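
The sketch below models this in Python, loosely following the example approach described in the OpenGL `EXT_texture_filter_anisotropic` extension: measure the pixel's footprint along both screen axes, take a number of probes equal to the ratio of the long side to the short side (capped at the maximum anisotropy level), and choose the mipmap level from the long side divided by the probe count so that each probe stays sharp. With `max_aniso=1` it reduces to a single probe at the ordinary trilinear level. The function names are hypothetical, the derivatives are assumed to be in base-level texels per screen pixel, and `sample_fn` stands in for any trilinear sampler. `max_full_quality_angle` relies on the simplified assumption that tilting a flat surface by an angle θ stretches its footprint by 1/cos θ, to show why each doubling of the AF level buys less.

```python
import math


def anisotropic_params(dudx, dvdx, dudy, dvdy, max_aniso):
    """Return (probe_count, lod) for one pixel (hypothetical helper).

    The four derivatives are assumed to be in base-level texels per screen pixel.
    """
    px = math.hypot(dudx, dvdx)  # footprint length along screen x
    py = math.hypot(dudy, dvdy)  # footprint length along screen y
    p_max = max(px, py)
    p_min = max(min(px, py), 1e-8)  # guard against division by zero
    # One probe per unit of elongation, capped by the AF level (at least one).
    probes = max(1, min(math.ceil(p_max / p_min), max_aniso))
    # Each probe only covers p_max / probes texels, so a sharper level suffices.
    lod = max(math.log2(p_max / probes), 0.0) if p_max > 0 else 0.0
    return probes, lod


def anisotropic_sample(sample_fn, u, v, du, dv, probes, lod):
    """Average `probes` samples spaced evenly along the footprint's major axis.

    (du, dv) is the major axis in normalized texture coordinates (an assumption),
    and sample_fn(u, v, lod) is any trilinear sampler.
    """
    total = 0.0
    for i in range(probes):
        # Evenly spaced offsets inside [-0.5, 0.5] of the axis, centered on (u, v).
        t = (i + 0.5) / probes - 0.5
        total += sample_fn(u + du * t, v + dv * t, lod)
    return total / probes


def max_full_quality_angle(max_aniso):
    """Tilt angle in degrees at which a 1/cos(angle) stretch reaches max_aniso."""
    return math.degrees(math.acos(1.0 / max_aniso))


print(anisotropic_params(8.0, 0.0, 0.0, 1.0, 16))  # (8, 0.0)
print(anisotropic_params(8.0, 0.0, 0.0, 1.0, 2))  # (2, 2.0)
print([round(max_full_quality_angle(n), 1) for n in (2, 4, 8, 16)])  # [60.0, 75.5, 82.8, 86.4]
```

For a footprint 8 texels long and 1 texel wide, a 16x cap yields 8 probes at level 0, while a 2x cap yields 2 probes at level 2; isotropic trilinear filtering would pick level 3 for the same footprint and blur it. Under the 1/cos θ model, 2x, 4x, 8x, and 16x reach their limits at roughly 60°, 75.5°, 82.8°, and 86.4° from head-on, so each step covers a narrower band of grazing angles.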

Anisotropic filtering can be controlled through the NVIDIA Control Panel within the 3D Settings section; however, for the best performance and compatibility, NVIDIA recommends that users leave this setting controlled by the application.
